How do Smart Assistants Work Under the Hood?

Updated on 28th Aug 2020 15:06 in General, Smart

It seems every device these days includes a virtual assistant, from mobile phones to desktop computers and, more recently, even ordinary-looking speakers. All this is very nice and can certainly come in handy now and then, but what are they really, and how do they work?


What is a smart voice assistant?

You have likely already heard of one or two of these assistants, such as Siri, Alexa, or Cortana. They exist to let users hold spoken conversations with their devices, making those devices easier to use and more accessible. They can perform many tasks, such as setting reminders and timers, and with smart home integration they can even turn on the lights or lock the door with a simple request.

How do smart voice assistants work?

Each voice assistant involves many different steps, and the exact methods vary a lot from one system to another, but generally they do something similar to what is covered here. To be precise, we will base the steps on the open-source assistant Mycroft, which likely works in a similar way to the big-name commercial products while having source code that anyone can read and study for free.

Hearing your voice

Many smart voice assistants come in the form of a "smart speaker", which is often advertised as primarily a speaker with the convenient addition of a voice assistant. These devices are equipped with a microphone that lets them listen to you talk and create recordings, so that the following steps can work out what the request is.
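How a device decides when a recording should start and stop varies by product, and Mycroft's own listener may work quite differently. As a minimal sketch, assuming plain energy thresholding (a simple form of voice activity detection) on 16 kHz audio stored as a list of floats, the device can measure the loudness of short frames and keep the stretch where loud frames appear, tolerating a few quiet frames for pauses between words. The function names, the 160-sample frame length and the threshold are all hypothetical.

```python
import math
from typing import List, Optional, Tuple


def frame_rms(samples: List[float], frame_len: int) -> List[float]:
    """Root-mean-square energy (loudness) of each non-overlapping frame."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return energies


def find_speech(samples: List[float], frame_len: int = 160,
                threshold: float = 0.1, hangover: int = 3) -> Optional[Tuple[int, int]]:
    """Return (start, end) sample indices of the first loud region, or None.

    threshold and hangover (quiet frames tolerated before the recording stops)
    are illustrative values, not tuned for any real device.
    """
    energies = frame_rms(samples, frame_len)
    start = None
    quiet = 0
    for i, energy in enumerate(energies):
        if energy >= threshold:
            if start is None:
                start = i  # first loud frame: start recording
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet > hangover:  # silence lasted long enough: stop recording
                return start * frame_len, (i - quiet + 1) * frame_len
    if start is None:
        return None  # nothing loud enough was heard
    return start * frame_len, (len(energies) - quiet) * frame_len
```

Energy alone is easily fooled by a slammed door or a television, which is one reason noise reduction at the microphone stage helps.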

An interesting detail is that the microphone is usually several microphones. While some assistants probably capture your voice with a single microphone, most include an array of microphones to improve the quality of the recordings. Arrays offer clear advantages over a single microphone, including the ability to capture sound coming from any direction around the device.

Many microphone arrays also include algorithms that remove ambient noise from the recording, which they can do thanks to their multiple inputs. Depending on the device, some can even actively work out which direction the voice is likely coming from and turn down the signal from the other directions, a technique known as beamforming. While this might sound like an expensive gimmick, reducing noise and ambient sound at this stage makes the later steps easier, allowing for more accurate detection.
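The direction-finding idea can be illustrated with delay-and-sum beamforming. This is a simplified sketch, not what any particular device ships: it assumes just two microphones, whole-sample delays, and a brute-force cross-correlation search to find how much later the voice reaches the second microphone. Once the channels are lined up, averaging them keeps the voice at full strength, while noise that differs between the microphones partially cancels out.

```python
from typing import List, Sequence


def estimate_delay(ref: Sequence[float], other: Sequence[float], max_lag: int) -> int:
    """Lag in samples by which `other` trails `ref`, via brute-force cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, x in enumerate(ref):
            m = n + lag
            if 0 <= m < len(other):
                score += x * other[m]
        if score > best_score:  # the best-matching lag is the arrival-time difference
            best_lag, best_score = lag, score
    return best_lag


def delay_and_sum(channels: Sequence[Sequence[float]], delays: Sequence[int]) -> List[float]:
    """Shift each channel earlier by its delay so the voice lines up, then average."""
    out = []
    for n in range(len(channels[0])):
        total, count = 0.0, 0
        for channel, d in zip(channels, delays):
            m = n + d
            if 0 <= m < len(channel):
                total += channel[m]
                count += 1
        out.append(total / count if count else 0.0)
    return out


if __name__ == "__main__":
    mic1 = [0, 0, 1, 3, -2, 1, 0, 0, 0, 0, 0, 0]
    mic2 = [0, 0, 0] + mic1[:-3]  # same voice, arriving 3 samples later
    d = estimate_delay(mic1, mic2, max_lag=5)
    print(d, delay_and_sum([mic1, mic2], [0, d]))
```

Real arrays typically use more microphones, fractional delays and frequency-domain methods, but the principle of aligning and combining channels is the same.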

Understanding what you are saying

The system obviously needs to know what you said, but how does it actually turn spoken words into something it can use to perform actions? Before any real action can be taken, the device needs to convert the spoken words in the recorded audio clip into text, which it can then use to call people, look up terms, set reminders, and all sorts of other fun things.

Unfortunately, converting speech to text is not easy. The most prominent companies have worked on it for years and made relatively good progress, but after spending so many resources developing this technology, they keep their systems closed source, in some cases with very little transparency. The reason large corporations lead this market is that a decent system requires a tremendous amount of data.

In fact, Google uses over 100,000 hours of audio data in its speech to text system. Most Speech to Text (STT) systems use machine learning to learn what each word sounds like when spoken by different people, with different accents, and in different acoustic environments. Considering all the combinations of factors that can affect how a given sentence sounds to a microphone, it becomes evident that a large quantity of data is required to get anything close to decent.
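To give a feel for what an STT model actually receives, here is a common first step in many speech pipelines: cutting the recording into short, overlapping frames, from which spectral features are then computed for the model. The 25 ms window and 10 ms hop are common choices assumed here rather than values taken from any specific system, and the feature computation and the model itself are left out.

```python
from typing import List, Sequence


def frame_signal(samples: Sequence[float], sample_rate: int = 16000,
                 win_ms: int = 25, hop_ms: int = 10) -> List[List[float]]:
    """Cut audio into short overlapping frames (25 ms windows every 10 ms by default)."""
    win = sample_rate * win_ms // 1000  # 400 samples at 16 kHz
    hop = sample_rate * hop_ms // 1000  # 160 samples at 16 kHz
    return [list(samples[start:start + win])
            for start in range(0, len(samples) - win + 1, hop)]
```

One second of 16 kHz audio becomes 98 frames of 400 samples each, and every one of those frames is a training example the model must learn to interpret across voices, accents and rooms, which hints at why so much data is needed.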

One open option that you can check out right now is DeepSpeech, Mozilla's open-source STT engine, available on GitHub. It offers pre-trained models that work pretty well despite using far less data than commercial options. Still, because of the large quantity of data required and the complexity of the software, companies that have managed to get an STT system working protect it very carefully.
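"Works pretty well" is usually quantified with the word error rate (WER): the number of word substitutions, deletions and insertions needed to turn the recognised text into the correct transcript, divided by the number of words in the transcript. A minimal sketch using the standard edit-distance dynamic programme, assuming simple whitespace tokenisation and lower-casing:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j]: fewest edits turning the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # deletion
                             dist[i][j - 1] + 1,         # insertion
                             dist[i - 1][j - 1] + cost)  # match or substitution
    return dist[len(ref)][len(hyp)] / len(ref)
```

For example, hearing "turn of the room lights" for "turn off the room light" counts as two substitutions out of five words, a WER of 0.4.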

Making a decision

This step likely varies the most between systems, as decision making is at the core of the assistant and essentially forms its "personality". Mycroft uses a skill system that lets developers extend the core functions by registering actions for the text returned by the STT service. When Mycroft receives text from the STT service, it places it onto a central message bus that every skill can access. Each skill then decides whether it can provide an answer for the query.
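The message bus pattern can be sketched in a few lines. This is a hypothetical, in-process illustration rather than Mycroft's actual message bus or skill API: each skill is a plain function that either declines (returns `None`) or offers a reply with a confidence score, and the most confident offer wins, which is one simple way to settle competing skills. The skill names and scores are made up.

```python
from typing import Callable, List, Optional, Tuple

# A hypothetical skill: takes the utterance, returns (confidence, reply) or None to decline.
Skill = Callable[[str], Optional[Tuple[float, str]]]


class MessageBus:
    def __init__(self) -> None:
        self.skills: List[Skill] = []

    def register(self, skill: Skill) -> None:
        self.skills.append(skill)

    def publish(self, utterance: str) -> Optional[str]:
        # Every registered skill sees the utterance and decides whether it can answer.
        offers = [offer for offer in (skill(utterance) for skill in self.skills)
                  if offer is not None]
        if not offers:
            return None
        # Settle competing skills by picking the highest-confidence offer.
        return max(offers, key=lambda offer: offer[0])[1]


def timer_skill(utterance: str) -> Optional[Tuple[float, str]]:
    if "timer" in utterance.lower():
        return 0.9, "Timer set."
    return None


def chit_chat_skill(utterance: str) -> Optional[Tuple[float, str]]:
    # Claims every request, but with low confidence, so it acts as a fallback.
    return 0.1, "Sorry, I didn't catch that."


bus = MessageBus()
bus.register(timer_skill)
bus.register(chit_chat_skill)
print(bus.publish("Set a timer for five minutes"))  # Timer set.
print(bus.publish("Sing me a song"))                # Sorry, I didn't catch that.
```

Here the chit-chat skill answers everything but with low confidence, so it only speaks when no more specific skill claims the request.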

When a skill recognises a sentence it is familiar with, it processes the text and takes the necessary actions. Although this system can suffer from skills "arguing" over who handles a given request, it generally works well as long as each skill only attempts to handle specific queries. Unfortunately, most skills require particular sentences to trigger them, so saying "turn room light off" may work while saying "Please turn off the room light" does not.

Decision making can be very complicated, which is why this limitation is often in place. Although we rarely think about it, there are many ways to say exactly the same thing. We have words that change the tone of a sentence, words that add politeness, and sometimes words that have no effect on what the sentence asks for. This is why it is not enough for the system to understand the text of a command; it must understand the intent behind it.
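The difference between matching exact sentences and extracting an intent can be shown with a deliberately small example. The vocabulary and function names below are hypothetical: instead of comparing the whole sentence, the parser looks for the pieces the action needs (a verb, a state and a device) wherever they appear, so filler words like "please" and "the" no longer matter.

```python
import re
from typing import Dict, Optional

VERBS = {"turn", "switch"}           # hypothetical vocabulary for a lights skill
STATES = {"on", "off"}
DEVICES = {"light", "lights", "lamp"}


def exact_match(utterance: str) -> bool:
    """Brittle approach: only one fixed sentence triggers the skill."""
    return utterance.strip().lower() == "turn room light off"


def parse_light_intent(utterance: str) -> Optional[Dict[str, str]]:
    """Keyword approach: ignore word order and filler words, look for required pieces."""
    words = re.findall(r"[a-z]+", utterance.lower())
    verb = next((w for w in words if w in VERBS), None)
    state = next((w for w in words if w in STATES), None)
    device = next((w for w in words if w in DEVICES), None)
    if verb is None or state is None or device is None:
        return None  # a required piece is missing: not a lights request
    return {"intent": "set_light", "state": state}


print(exact_match("Please turn off the room light"))         # False
print(parse_light_intent("Please turn off the room light"))  # {'intent': 'set_light', 'state': 'off'}
```

Both phrasings now produce the same intent. Keyword matching is still brittle in its own ways (it cannot tell "don't turn the light off" from "turn the light off"), which is part of why intent remains hard.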

Intent is still tricky to get right, and most commercial products still struggle with it at the time of writing. For now, the best bet is to accept that there may be only one way to tell your smart assistant to turn on the light, and to always use it.

Responding to you

The final step is to respond with the appropriate information or confirmation, depending on what was requested. This step uses Text to Speech (TTS) technology to generate sound clips of spoken words from the input text. TTS is considerably more mature than speech to text, having been heavily developed for all sorts of purposes, but it is still hard to get right, and one common issue is that the resulting voice sounds too robotic.

A common type of TTS that tends to produce robotic-sounding voices is unit selection, in which speech is created from recorded audio clips of small units of speech, such as words or parts of words, which are stitched together as smoothly as possible. Another option is neural speech synthesis, such as Mozilla TTS, which uses deep learning to create a much more realistic-sounding voice. The timing between words, among other things, is often much better in this type of system.
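The stitching step of a unit-based system can be sketched as follows. This is a toy illustration under strong assumptions: one pre-recorded clip per word (stored as a list of samples in a hypothetical `units` dictionary) and a short linear crossfade at each join to soften the seam. Real unit selection systems also choose between many candidate recordings of each unit to minimise audible joins, which this sketch skips entirely.

```python
from typing import Dict, List


def crossfade_concat(clips: List[List[float]], fade: int) -> List[float]:
    """Join clips end to end, blending `fade` samples at each seam with a linear crossfade."""
    out = list(clips[0])
    for clip in clips[1:]:
        n = min(fade, len(out), len(clip))
        for i in range(n):
            w = (i + 1) / (n + 1)  # weight of the new clip ramps up across the overlap
            k = len(out) - n + i
            out[k] = out[k] * (1 - w) + clip[i] * w
        out.extend(clip[n:])  # the rest of the new clip is appended unchanged
    return out


def synthesise(text: str, units: Dict[str, List[float]], fade: int = 2) -> List[float]:
    """Look up the recorded clip for each word and stitch them together."""
    return crossfade_concat([units[word] for word in text.lower().split()], fade)
```

The audible seams and flat, fixed timing that remain at each join are a large part of why this approach tends to sound robotic.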

Finally, once a TTS service has created a sample, the device plays it through a speaker so the user can hear the response. Interestingly, during my experiments I found that TTS was one of the slowest parts of the entire process. Mozilla TTS gave me a voice that sounded amazing, but unless I ran the software on my powerful primary computer (with a GTX 1080), creating an audio clip took over 15 seconds!

Even with commercial APIs, I found that the combined delays of uploading audio to a remote server, processing it, sending the text back, sending text to be synthesised, and downloading the resulting sound were sometimes uncomfortably long. Where possible, I think the best solution for a really excellent voice assistant is to do all of this processing locally: when I did that, the response time for the entire process fell to well under 3 seconds.
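To find out where the time goes in your own setup, a small timing helper around each stage is enough to see which one dominates. This is a sketch using only the Python standard library; `time.sleep` stands in for the real STT, decision and TTS calls, and the durations are arbitrary placeholders, not measurements.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


@contextmanager
def timed(stage: str, timings: Dict[str, float]) -> Iterator[None]:
    """Store the wall-clock seconds spent inside the `with` block under `stage`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = time.perf_counter() - start


def run_fake_pipeline() -> Dict[str, float]:
    # time.sleep stands in for the real STT, skill and TTS calls (placeholder durations).
    timings: Dict[str, float] = {}
    with timed("stt", timings):
        time.sleep(0.01)
    with timed("decide", timings):
        time.sleep(0.01)
    with timed("tts", timings):
        time.sleep(0.1)
    return timings


if __name__ == "__main__":
    timings = run_fake_pipeline()
    for stage, seconds in timings.items():
        print(f"{stage:>6}: {seconds:.3f} s")
    print("slowest:", max(timings, key=timings.get))
    print(f"total: {sum(timings.values()):.3f} s")
```

Wrapping a remote call this way measures the full round trip, including upload and download, which is exactly the overhead that disappears when everything runs locally.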

Summary

Overall, smart assistants work by using microphones to record a user speaking a request, preferably using multiple microphones to produce a noise-reduced sample. They then send the clip to a Speech to Text (STT) service that converts it into text. The system uses that text to decide what action to take and how to respond. Finally, it sends the response text generated by the decision-making logic to a Text to Speech (TTS) service, which converts it into spoken words in an audio clip.

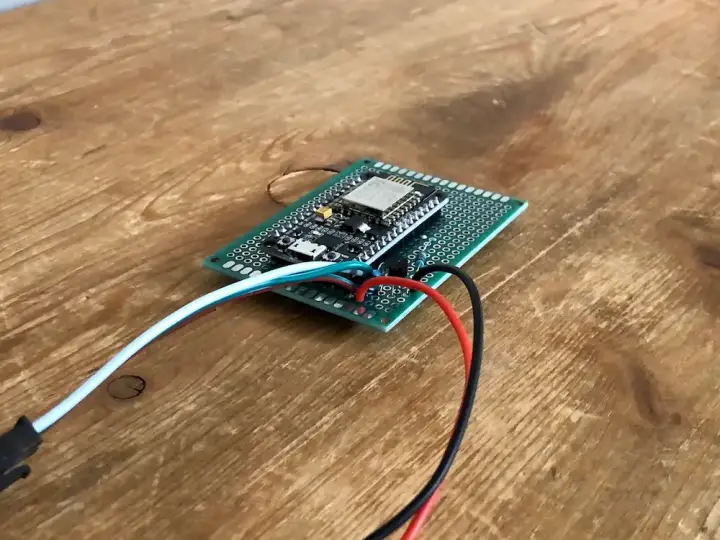
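The four stages can be summarised as a skeleton in code. The interfaces below are hypothetical: each stage is just a function passed in, so a local engine or a remote API could be swapped in for any of them without changing the overall flow.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Assistant:
    """Wires the stages together; every field is a pluggable (hypothetical) stage."""
    record: Callable[[], List[float]]               # microphone array -> cleaned audio
    speech_to_text: Callable[[List[float]], str]    # audio -> text
    decide: Callable[[str], str]                    # skills / intent handling -> reply text
    text_to_speech: Callable[[str], List[float]]    # reply text -> audio
    play: Callable[[List[float]], None]             # audio -> speaker

    def handle_one_request(self) -> str:
        audio = self.record()
        text = self.speech_to_text(audio)
        reply = self.decide(text)
        self.play(self.text_to_speech(reply))
        return reply


if __name__ == "__main__":
    # Stand-in stages so the wiring can run without any audio hardware or models.
    assistant = Assistant(
        record=lambda: [0.0] * 16000,
        speech_to_text=lambda audio: "what time is it",
        decide=lambda text: "It is noon." if "time" in text else "Sorry?",
        text_to_speech=lambda reply: [0.0] * len(reply),
        play=lambda audio: None,
    )
    print(assistant.handle_one_request())
```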

Hopefully, this helps you understand the basics of smart voice assistants. Dive into any one component and the complexity is almost endless, but at a surface level they operate on a relatively simple principle. Make sure to come back soon to check out my DIY voice assistant build using Mycroft!